Everything You Need to Know About ChatGPT Model 4o

ChatGPT Model 4o is OpenAI’s latest AI tool, designed for speed and versatile input and output, with enhanced capabilities for handling text, images,

Imagine you’re exploring a vast museum filled with exhibits on every topic imaginable. Now, picture a guide who can effortlessly explain each exhibit, answer any question, and even engage in a conversation about your favorite topics. That’s what GPT-4o, the model behind ChatGPT, is like: a highly capable assistant that can handle text, audio, images, and even video, providing seamless and dynamic interactions. Let’s delve into how this technology works and why it matters.

What is ChatGPT Model 4o?

GPT-4o (the “o” stands for “omni”, not the digit zero) is like a brainy best friend who excels at understanding and generating text, audio, and images in real time. It’s what OpenAI calls an “omni” model, meaning it can integrate several modalities to provide dynamic and intuitive interactions. Think of it as a supercharged version of your favorite virtual assistant, equipped to comprehend and respond to your queries across different formats.

How Does GPT-4o Work?

Let’s peel back the layers and uncover the inner workings of GPT-4o:

1. Training on Diverse Data

GPT-4o is like a diligent student who has devoured a vast library of knowledge. It has been trained on a plethora of datasets, spanning diverse topics and languages. From books and articles to videos and audio recordings, GPT-4o has absorbed a wealth of information, allowing it to understand and generate content across a wide spectrum of subjects.

2. Understanding Multimodal Inputs

Imagine juggling multiple tasks simultaneously: explaining a painting, describing background music, and reading out a text description. GPT-4o does just that, processing text, audio, and images together. It’s like having a multitasking maestro who can weave different inputs into a coherent and comprehensive response.
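As a rough illustration of what a mixed text-and-image input can look like, the sketch below builds a request body in the shape used by OpenAI’s Chat Completions API, where a single user message carries both a text part and an image part. The helper name `build_multimodal_message`, the question, and the example URL are placeholders invented for this illustration; the payload is only constructed and printed, not sent anywhere.

```python
import json


def build_multimodal_message(question: str, image_url: str) -> dict:
    """Return a chat request body with one text part and one image part."""
    return {
        "model": "gpt-4o",
        "messages": [
            {
                "role": "user",
                # A list of typed parts lets one message mix modalities.
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


payload = build_multimodal_message(
    "What is happening in this painting?",
    "https://example.com/painting.jpg",  # placeholder URL, not a real image
)
print(json.dumps(payload, indent=2))
```

The point of the structure is that the text and the image travel in the same message, so the model can answer the question with the picture in view.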

3. Generating Contextual Responses

When you interact with GPT-4o, it’s not just about the words you say — it’s about the context in which you say them. Whether it’s a series of text messages, a spoken query, or a visual prompt, GPT-4o takes into account the context of your inputs to generate responses that are not only accurate but also relevant. It’s like having a conversation with a friend who truly understands where you’re coming from.

4. Real-Time Processing

Speed matters, especially when it comes to AI-driven interactions. According to OpenAI, GPT-4o can respond to audio inputs in as little as 232 milliseconds, with an average of about 320 milliseconds, which is similar to human response time in conversation. This real-time processing makes exchanges with GPT-4o feel smooth and natural.

Real-World Applications

Now, let’s take a peek into the real-world applications of GPT-4o:

Enhanced Customer Support

Ever wished customer service could be more efficient and personalized? With GPT-4o, it can be. Picture contacting customer support and receiving instant, context-aware responses — not just via text but also through voice and images. GPT-4o has the potential to revolutionize customer support by providing multi-channel, real-time assistance tailored to your needs.

Creative Content Creation

Are you a content creator in search of inspiration? Look no further than GPT-4o. Whether you need help generating text, composing music, creating artwork, or producing videos, GPT-4o is your creative companion. It’s like having a multi-talented collaborator who’s always ready to lend a hand and fuel your creative endeavors.

Education and Learning

Learning should be engaging, interactive, and accessible to all. That’s where GPT-4o comes in. Whether you’re a student grappling with complex concepts or an educator looking for innovative teaching tools, GPT-4o can assist. From explaining concepts through text, diagrams, and spoken explanations to providing personalized tutoring sessions, GPT-4o is like having a knowledgeable mentor by your side every step of the way.

Accessibility and Inclusion

In a world where accessibility is paramount, GPT-4o shines as a beacon of inclusivity. Its multimodal capabilities make information more accessible to everyone: it can read text aloud or describe images for people with visual impairments, and turn spoken language into text for language learners. With GPT-4o, information knows no barriers.

Model Evaluations

Let’s delve deeper into GPT-4o’s performance in various benchmarks:

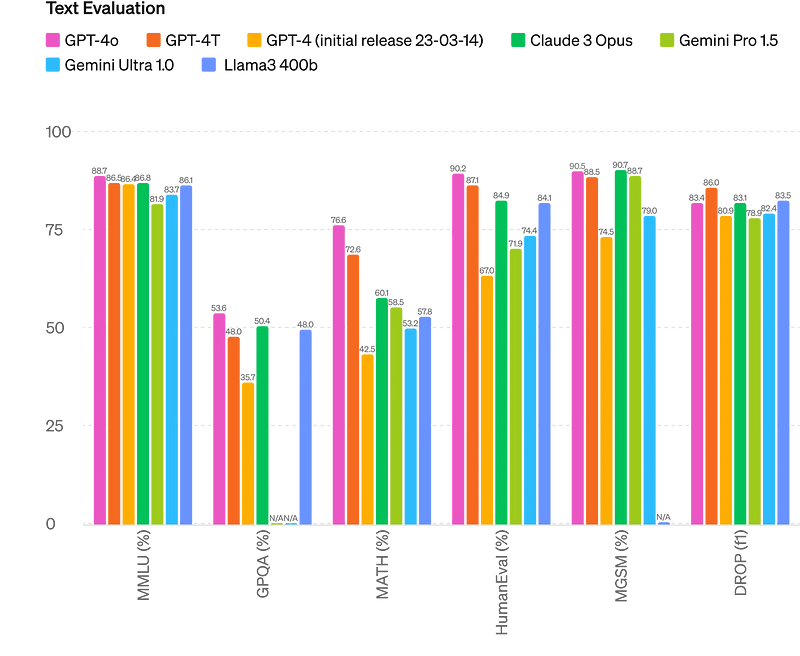

Text Evaluation

GPT-4o achieves GPT-4 Turbo-level performance in text comprehension, reasoning, and coding intelligence. It sets a new high score of 88.7% on zero-shot chain-of-thought (CoT) MMLU, which tests general knowledge questions, and scores 87.2% on the traditional 5-shot no-CoT MMLU. This means it not only understands complex text but can also reason and provide accurate responses. Benchmark figures are as reported by OpenAI.
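The two MMLU settings differ mainly in how the question is put to the model. The sketch below shows one plausible way to build each prompt: a zero-shot CoT prompt gives no worked examples but asks for step-by-step reasoning, while a 5-shot no-CoT prompt shows five solved examples and asks only for the answer letter. The prompt wording, the function names, and the toy arithmetic questions are made up for illustration and are not OpenAI’s actual evaluation setup.

```python
def format_question(question: str, choices: list[str]) -> str:
    """Render a multiple-choice question with lettered options."""
    letters = "ABCD"
    lines = [question] + [f"{letters[i]}. {c}" for i, c in enumerate(choices)]
    return "\n".join(lines)


def zero_shot_cot_prompt(question: str, choices: list[str]) -> str:
    """No solved examples; the model is asked to reason before answering."""
    return (
        format_question(question, choices)
        + "\nLet's think step by step, then give the answer letter."
    )


def few_shot_prompt(examples, question: str, choices: list[str]) -> str:
    """Solved examples first, then the target question with no reasoning."""
    blocks = [format_question(q, c) + f"\nAnswer: {a}" for q, c, a in examples]
    blocks.append(format_question(question, choices) + "\nAnswer:")
    return "\n\n".join(blocks)


# Five toy solved examples (hypothetical; the correct option is always B).
examples = [
    (f"What is {n} + {n}?", [str(2 * n - 1), str(2 * n), str(2 * n + 1), str(2 * n + 2)], "B")
    for n in range(1, 6)
]
target_q = "What is 7 + 7?"
target_choices = ["12", "13", "14", "15"]

cot = zero_shot_cot_prompt(target_q, target_choices)
five_shot = few_shot_prompt(examples, target_q, target_choices)
print(cot)
print("-----")
print(five_shot)
```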

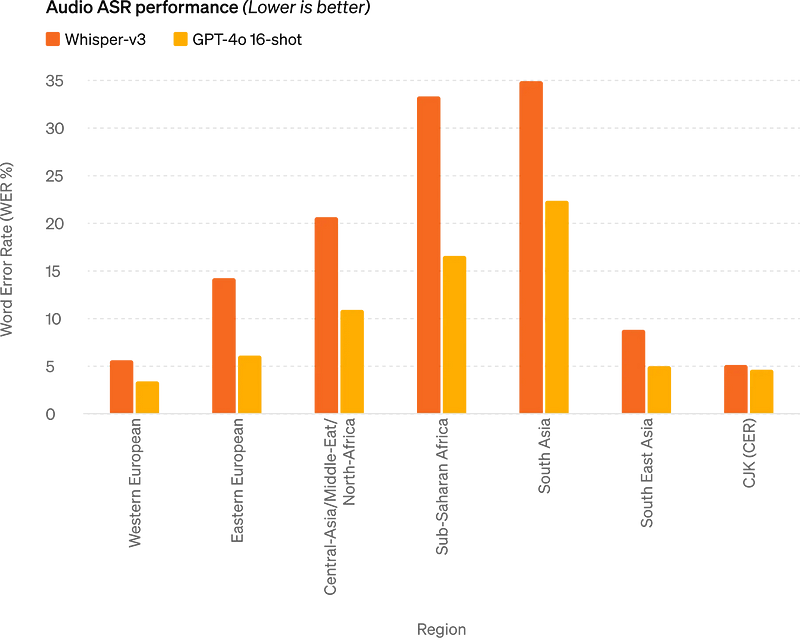

Audio ASR Performance

GPT-4o significantly improves speech recognition performance over Whisper-v3 across all languages, especially lower-resourced ones. This means it’s better at understanding and transcribing spoken language accurately, making it a reliable companion for tasks that involve audio inputs. Benchmark figures are as reported by OpenAI.
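Speech recognition quality is commonly reported as word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the model’s transcript into the reference transcript, divided by the number of reference words. Lower is better. The following is a minimal sketch of that calculation; the sample sentences are invented, and real evaluations usually normalize text more carefully than the simple lowercasing used here.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(
                min(
                    prev[j] + 1,         # reference word missing (deletion)
                    curr[j - 1] + 1,     # extra hypothesis word (insertion)
                    prev[j - 1] + cost,  # match or substitution
                )
            )
        prev = curr
    return prev[-1] / len(ref)


reference = "the quick brown fox jumps over the lazy dog"
hypothesis = "the quick brown fox jumped over lazy dog"
wer = word_error_rate(reference, hypothesis)
print(f"WER: {wer:.3f}")  # one substitution + one deletion over 9 words
```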

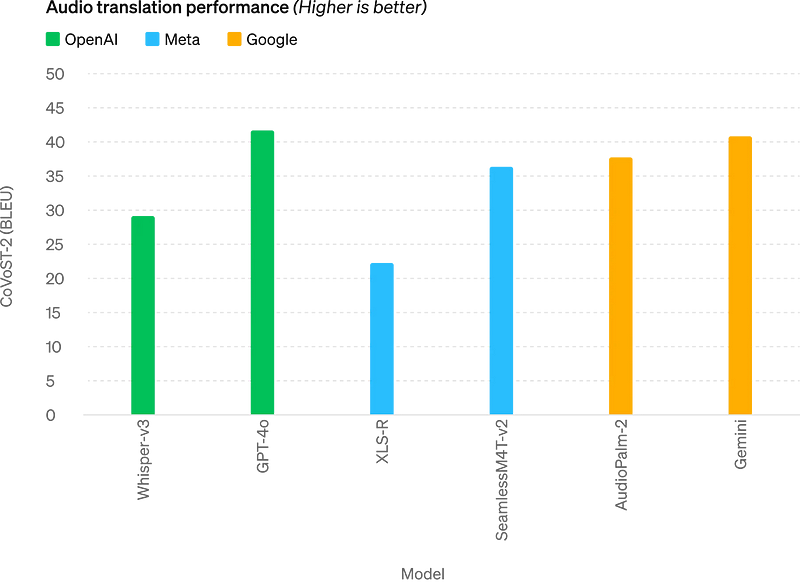

Audio Translation Performance

In audio translation, GPT-4o sets a new state of the art, outperforming Whisper-v3 on the MLS benchmark and showing its strength in translating speech from one language into another. This makes it a valuable tool for tasks that require accurate, contextually appropriate translation of spoken content. Benchmark figures are as reported by OpenAI.

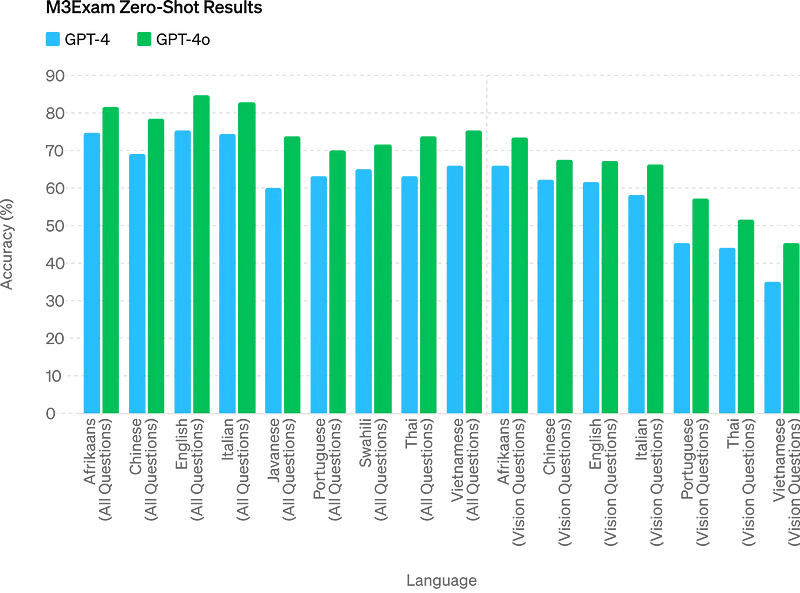

M3Exam Performance

The M3Exam benchmark evaluates multilingual and vision capabilities through multiple-choice questions, some of which include figures and diagrams. GPT-4o outperforms GPT-4 on this benchmark across all languages, demonstrating stronger multilingual and visual understanding. This means it excels not only in text comprehension but also in understanding visual content, making it a versatile model for a wide range of tasks. Benchmark figures are as reported by OpenAI.

Language Tokenization

GPT-4o introduces a new tokenizer that reduces the number of tokens required to represent text, improving efficiency. For example, it uses 4.4x fewer tokens for Gujarati and 1.1x fewer for English, making it more efficient in handling various languages. This enhances its performance and scalability, ensuring it can handle large volumes of text efficiently.
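To make those ratios concrete: if a text took N tokens under the previous tokenizer and the new one uses k times fewer, it now takes roughly N / k tokens, a saving of 1 - 1/k. The sketch below applies that arithmetic to the two ratios quoted above. The starting count of 1,000 tokens is an arbitrary illustrative figure, not a measurement, and the function names are made up for this example.

```python
def tokens_after(old_tokens: int, times_fewer: float) -> float:
    """Estimated token count once the text needs `times_fewer`x fewer tokens."""
    return old_tokens / times_fewer


def savings_fraction(times_fewer: float) -> float:
    """Fraction of tokens saved for a given 'x times fewer' ratio."""
    return 1 - 1 / times_fewer


OLD_TOKENS = 1000  # hypothetical starting point for illustration
for language, ratio in {"Gujarati": 4.4, "English": 1.1}.items():
    new_tokens = tokens_after(OLD_TOKENS, ratio)
    print(
        f"{language}: {OLD_TOKENS} -> {new_tokens:.0f} tokens "
        f"({savings_fraction(ratio):.0%} fewer)"
    )
# Gujarati: 1000 -> 227 tokens (77% fewer)
# English: 1000 -> 909 tokens (9% fewer)
```

Because usage limits and API pricing are typically counted in tokens, the gain is much larger for languages like Gujarati than for English, where the previous tokenizer was already fairly compact.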

Why is GPT-4o Special?

Comprehensive Capabilities

GPT-4o’s ability to handle text, audio, and images simultaneously makes it exceptionally versatile. Whether you’re engaging in a text-based conversation, listening to audio content, or analyzing visual data, GPT-4o has you covered.

Improved Performance

Compared to its predecessors, GPT-4o offers superior performance across various modalities and languages. Its advancements in text comprehension, speech recognition, and visual understanding set a new standard for AI models, ensuring high-quality interactions and accurate responses.

Accessibility and Affordability

OpenAI has made GPT-4o more accessible and affordable, with significant improvements in cost and speed. This ensures that more people can benefit from this cutting-edge technology, democratizing access to advanced AI capabilities.

Conclusion

GPT-4o is a groundbreaking advancement in AI technology, offering unparalleled capabilities in text, audio, and visual processing. Whether you’re seeking assistance with customer support, content creation, education, or accessibility, GPT-4o is your ultimate companion. Its versatility, performance, and accessibility make it a game-changer in the world of artificial intelligence, unlocking new possibilities and transforming the way we interact with technology.